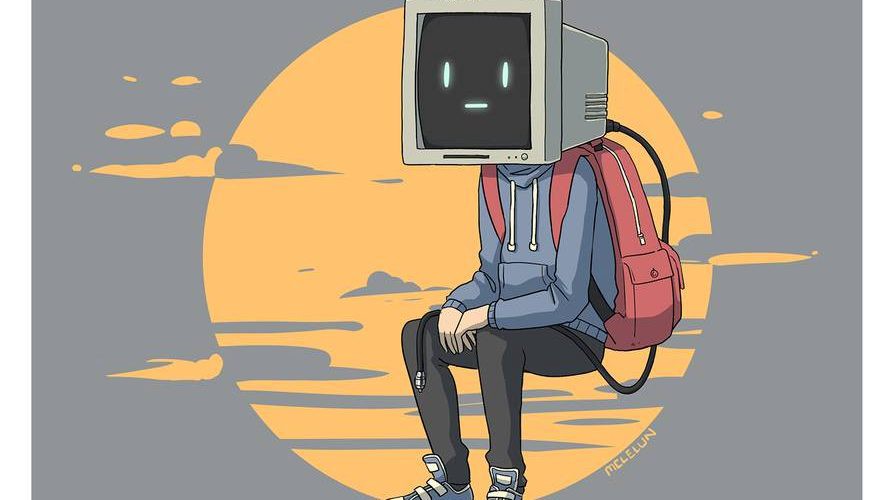

Over the last few years, technology, and AI especially, has advanced so rapidly that many have started to think we are close to the technological singularity: the point at which hyper-intelligent AI grows so quickly that it takes over human civilisation as we know it. I, however, would like to argue that even if AI can be more intelligent than humans in some specific respects, it will never be able to achieve something core to the great thinkers of our species: philosophy.

What is it to philosophise?

Famously, the question “what is philosophy?” is itself a philosophical one. Broadly speaking, philosophy is the study of very fundamental questions about life, reality and meaning. For example, whereas scientists and social scientists ask about the causes of physical, chemical, historical, political or economic events, philosophers ask what causes are and whether there are any such things. Similarly, while all academic disciplines aim at knowledge of their fields, philosophers ask what knowledge is and whether we can know anything.

It might sound ridiculously pedantic, but philosophy allows us to understand the world better and, by extension, to make better decisions and achieve more as a species. I would wager that many self-made billionaires and world leaders either have a background in philosophy or otherwise understand the value of philosophical grappling.

So how does one actually go about philosophising? Usually one guy comes up with an idea (e.g. free will is an illusion), fleshes out his argument, then publishes a paper. Some other guy then reads it, comes up with his own counterargument (e.g. our minds are not of this world), fleshes it out and publishes. Another guy, or even the original one, then thinks up yet another counter (e.g. this world interacts directly with our minds), fleshes it out and publishes. This process continues indefinitely, as there is very rarely an end to a philosophical debate.

But now I want to drill down into how exactly one “comes up” with a counterargument, because fleshing it out and publishing it are comparatively simple and follow naturally once you have the idea. There are countless ways of countering an argument, but at the end of the day, explicitly or implicitly, this is what happens: you break the argument down into its components and critique either one of its premises or its validity. Let’s take the free-will-illusion argument as an example:

- The world is deterministic.

- Our minds are of this world.

- Therefore, our minds are deterministic.
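To make the “premise versus validity” distinction concrete, here is a minimal sketch in Lean 4 that formalises the argument above. It assumes that “the world is deterministic” can be read as “everything in the world is deterministic”; the names `Thing`, `InWorld`, `Deterministic` and `mind_deterministic` are hypothetical, chosen purely for illustration.

```lean
-- Hypothetical formalisation of the free-will-illusion argument.
-- `Thing`, `InWorld` and `Deterministic` are illustrative names, not library definitions.
theorem mind_deterministic
    (Thing : Type) (InWorld Deterministic : Thing → Prop)
    -- Premise 1 (assumed reading): everything in the world is deterministic.
    (worldDeterministic : ∀ x, InWorld x → Deterministic x)
    -- Premise 2: a given mind is of this world.
    (mind : Thing) (mindInWorld : InWorld mind) :
    -- Conclusion: that mind is deterministic.
    Deterministic mind :=
  worldDeterministic mind mindInWorld
```

Lean accepts the proof, but that only confirms the argument is valid: the conclusion follows if the premises hold. Whether it is sound depends on whether the premises are actually true, which no proof checker can settle. Dualism attacks the argument at `mindInWorld`, the second premise.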

One way of countering this argument would be to show that, say, the second premise is false. What if our minds are not of this world? Descartes held a famous view called dualism, according to which a person consists of a physical body and an immaterial mind that interact to carry out everyday human activities. If an argument has a false premise, it is no longer sound, so this would be a legitimate counterargument. But how the hell does one have that thought: “minds are of immaterial substance”? My guess is it’s one of the following:

- Descartes was dating a girl who was very into yoga (yes, yoga existed in his time). One day he went to pick her up at the studio and heard the instructor talk about how important it is to focus on both “body and mind”, as they are not one and the same.

- An adventurous friend of Descartes brought back some psilocybin mushrooms (better known today as magic mushrooms) from his travels in Central America. On taking the drug, known for its mind-altering effects, Descartes experienced his mind disconnecting from his body.

- While on holiday at a professor colleague’s farm, Descartes decided to mount a horse and take it for a ride. In a freak accident, he fell to the ground and had a near-death experience, the kind commonly reported to induce out-of-body experiences.

The point I’m trying to get at, and the reason I believe philosophising is a uniquely human thing, is that in order to have relevant thoughts, one has to have lived a life. All your ideas come from past experiences. The brain is fantastic at making associations (it’s how memory works: you remember something because something relevant triggers that memory). When you see the premise “our minds are of this world”, your brain immediately makes connections to relevant things: human psychology, relationships, society, the pursuit of happiness, the nature of the world, the continents, weather systems, natural phenomena or, if you have studied the works of Descartes, the notion of dualism. And BAM! There’s your counterargument.

Just listen to a philosopher speak, or read a piece of philosophical literature. Their arguments are absolutely littered with personal anecdotes and arguments by analogy. Many popular philosophical ideas would not exist, or at least would not be as prominent or useful, without analogies or thought experiments drawn from life. Great examples of this are Frank Jackson’s Mary’s room, Peter Singer’s drowning child, John Searle’s Chinese room, the ship of Theseus, the infinite monkey theorem and the infamous trolley problem. All of these ideas require that their originator has lived a life.

Why not computers?

Lack of connections

The whole connection network I described above is very important. No one fully understands how the brain does it, but every memory in your life is connected to a whole host of relevant things, such that it can be looked up by any one of them. For instance, take a horse-riding accident during your holiday. In your mind it will be linked to everything prominent you experienced during, and as a direct result of, the event: horses, saddles, farms, vacations, back pain, hospital beds and so on. The next time you think about saddles, you’ll be sure to remember the accident.

There is no feasible way for a computer to structurally build this kind of network. A computer would need to generate a set of, say, 1,000 tags for every 10-second event it records. These tags would also need to be indexed extremely efficiently, so that they could be retrieved almost instantly and at literally any time. And even then, when it is time to “recall” something relevant, how does the computer determine which of the enormous number of events matching a tag is actually relevant? And what if the specific event needed to make a counterargument just doesn’t have the right tag, because 1,000 tags weren’t enough? How many would it need? These are hard-hitting questions that computers simply cannot answer.
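The tagging scheme described above can be sketched as an inverted index, a standard structure that maps each tag to the set of events carrying it. This is a hypothetical, minimal Python illustration: the class name `TaggedMemory`, the example events and the “count the shared tags” relevance rule are all assumptions made for the sketch, not a description of how any real system (or the brain) works.

```python
from collections import defaultdict


class TaggedMemory:
    """Hypothetical sketch of a tag-indexed event store (an inverted index)."""

    def __init__(self):
        self.events = []               # event id (list position) -> description
        self.index = defaultdict(set)  # tag -> ids of events carrying that tag

    def record(self, description, tags):
        """Store an event and index it under each of its tags."""
        event_id = len(self.events)
        self.events.append(description)
        for tag in tags:
            self.index[tag].add(event_id)
        return event_id

    def recall(self, cue_tags):
        """Return events sharing at least one cue tag, most shared tags first.

        The scoring rule (number of shared tags, ties broken by recording order)
        is an arbitrary assumption standing in for "relevance".
        """
        scores = defaultdict(int)
        for tag in cue_tags:
            # .get() avoids inserting empty entries for unknown tags
            for event_id in self.index.get(tag, ()):
                scores[event_id] += 1
        ranked = sorted(scores, key=lambda e: (-scores[e], e))
        return [self.events[e] for e in ranked]


memory = TaggedMemory()
memory.record("fell off a horse on holiday", {"horse", "saddle", "farm", "holiday", "back pain"})
memory.record("bought a new saddle", {"saddle", "shop"})
memory.record("beach holiday", {"holiday", "sea"})
print(memory.recall({"saddle"}))  # ['fell off a horse on holiday', 'bought a new saddle']
print(memory.recall({"yoga"}))    # []
```

Note that an event lacking the right tag is simply never recalled, and the ranking rule is an arbitrary choice: these are exactly the two gaps raised above.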

And even if it could somehow make those connections, there is no way a computer could do something as complex as devising a thought experiment that accurately shows why its argument holds. It has no life experience to draw relevant situations from. Beyond that, the thought experiment must be convincing, in that it must agree with our intuitions. If you say, for instance, that the trolley problem is an ethical dilemma, it must be obvious why. This is an extremely tall order for a troupe of bytes and circuits.

Lack of initiative

Coming from a CS background, I can tell you that Machine Learning (the branch of AI behind most of today’s systems), as it currently stands, very much works in an input-output kind of way. You give it a set of inputs (an image, for instance) and it gives you a set of outputs (tags, for instance). The problem with this way of doing things is that it does not allow for something philosophising needs: initiative. To have a thought as wild as Descartes’ dualism, you need to arrive at it as a result of living life. When Descartes had his horse-riding accident, or whatever it was, he had a purely original thought of his own volition, prompted by what was going on around him and the experiences he had had before. A structured input-process-output paradigm, like the one used in computation, cannot and never will be able to do this. The requirements simply do not fit the computational model.

Why not AI?

A true AI (a theorised one that has thoughts, feelings, emotions and the capacity for truly intelligent interaction with the world) would be equivalent to a person, so naturally it too would be able to philosophise. Remember, though, that a true AI (or AGI) would not, by strict definition, be a computer. A computer is defined as a machine that can interpret and carry out instructions automatically using the input-process-output model. For the reasons described above, an AGI simply does not fit this model.

I argue only that computers (and things derived from them) cannot philosophise; I make no claim about other entities. Hell, I bet there’s an owl out there that has looked at the sunset and wondered where the sun, the universe and everything came from. That’s an animal philosophising.

So, all in all, philosophy is an exclusively meat-based experience. At least until Skynet goes live.

Image credit: mclelun